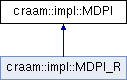

Represents an MDP with implementability constraints.

#include <ImMDP.hpp>

Public Member Functions

| MDPI (const shared_ptr< const MDP > &mdp, const indvec &state2observ, const Transition &initial) | |

| Constructs the MDP with implementability constraints. | |

| MDPI (const MDP &mdp, const indvec &state2observ, const Transition &initial) | |

| Constructs the MDP with implementability constraints. | |

| size_t | obs_count () const |

| size_t | state_count () const |

| long | state2obs (long state) |

| size_t | action_count (long obsid) |

| indvec | obspol2statepol (const indvec &obspol) const |

| Converts a policy defined in terms of observations to a policy defined in terms of states. | |

| void | obspol2statepol (const indvec &obspol, indvec &statepol) const |

| Converts a policy defined in terms of observations to a policy defined in terms of states. | |

| Transition | transition2obs (const Transition &tran) |

| Converts a transition from states to observations, adding probabilities of individual states. | |

| shared_ptr< const MDP > | get_mdp () |

| Internal MDP representation. | |

| Transition | get_initial () const |

| Initial distribution of MDP. | |

| indvec | random_policy (random_device::result_type seed=random_device{}()) |

| Constructs a random observation policy. | |

| prec_t | total_return (prec_t discount, prec_t precision=SOLPREC) const |

| Computes a return of an observation policy. | |

| void | to_csv (ostream &output_mdp, ostream &output_state2obs, ostream &output_initial, bool headers=true) const |

| Saves the MDPI to a set of 3 csv files, for transitions, observations, and the initial distribution. | |

| void | to_csv_file (const string &output_mdp, const string &output_state2obs, const string &output_initial, bool headers=true) const |

| Saves the MDPI to a set of 3 csv files, for transitions, observations, and the initial distribution. | |

Static Public Member Functions

| template<typename T = MDPI> | |

| static unique_ptr< T > | from_csv (istream &input_mdp, istream &input_state2obs, istream &input_initial, bool headers=true) |

| Loads an MDPI from a set of 3 csv files, for transitions, observations, and the initial distribution. | |

| template<typename T = MDPI> | |

| static unique_ptr< T > | from_csv_file (const string &input_mdp, const string &input_state2obs, const string &input_initial, bool headers=true) |

Static Protected Member Functions

| static void | check_parameters (const MDP &mdp, const indvec &state2observ, const Transition &initial) |

| Checks whether the parameters are correct. | |

Protected Attributes

| shared_ptr< const MDP > | mdp |

| the underlying MDP | |

| indvec | state2observ |

| maps index of a state to the index of the observation | |

| Transition | initial |

| initial distribution | |

| long | obscount |

| number of observations | |

| indvec | action_counts |

| number of actions for each observation | |

Detailed Description

Represents an MDP with implementability constraints.

Consists of an MDP and a set of observations.

Constructor & Destructor Documentation

◆ MDPI() [1/2]

| MDPI (const shared_ptr< const MDP > &mdp, const indvec &state2observ, const Transition &initial) | inline |

Constructs the MDP with implementability constraints.

This constructor makes it possible to share the MDP with other data structures.

Important: if the underlying MDP is modified externally, this object becomes invalid and its behavior is unpredictable.

- Parameters
  - mdp: A non-robust base MDP model.
  - state2observ: Maps each state to the index of the corresponding observation. A valid policy takes the same action in all states that share an observation. The index is 0-based.
  - initial: A representation of the initial distribution. The rewards in this transition are ignored (and should be 0).
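The constraint on valid policies described for state2observ can be illustrated with a small standalone check. This is only a sketch and not part of the MDPI interface: the function name `is_implementable` is hypothetical, `indvec` is assumed to be a vector of `long` indices, and observation indices and actions are assumed to be non-negative.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Stand-in for craam's indvec (assumed here to be a vector of long indices).
using indvec = std::vector<long>;

// Hypothetical helper: returns true when all states that share an
// observation are assigned the same action by the state policy.
bool is_implementable(const indvec& state2observ, const indvec& statepol) {
    assert(state2observ.size() == statepol.size());

    // The number of observations is one more than the largest 0-based index.
    long obscount = 0;
    for (long obs : state2observ) obscount = std::max(obscount, obs + 1);

    // Action recorded for each observation; -1 marks "not seen yet".
    indvec action_of_obs(static_cast<std::size_t>(obscount), -1L);
    for (std::size_t s = 0; s < state2observ.size(); ++s) {
        long& action = action_of_obs[state2observ[s]];
        if (action == -1) {
            action = statepol[s];       // first state with this observation
        } else if (action != statepol[s]) {
            return false;               // two states disagree
        }
    }
    return true;
}
```

With state2observ = {0, 0, 1}, the state policy {1, 1, 0} passes this check, while {1, 0, 0} does not, because states 0 and 1 share observation 0 but take different actions.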

◆ MDPI() [2/2]

| MDPI (const MDP &mdp, const indvec &state2observ, const Transition &initial) | inline |

Constructs the MDP with implementability constraints.

The MDP model is copied (using the copy constructor) and stored internally.

- Parameters
  - mdp: A non-robust base MDP model. The internal copy is not shared, which prevents direct external modification.
  - state2observ: Maps each state to the index of the corresponding observation. A valid policy takes the same action in all states that share an observation. The index is 0-based.
  - initial: A representation of the initial distribution. The rewards in this transition are ignored (and should be 0).

Member Function Documentation

◆ check_parameters()

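| static void check_parameters (const MDP &mdp, const indvec &state2observ, const Transition &initial) | inline, static, protected |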

Checks whether the parameters are correct.

Throws an exception if the parameters are wrong.

◆ from_csv()

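| template<typename T = MDPI> static unique_ptr< T > from_csv (istream &input_mdp, istream &input_state2obs, istream &input_initial, bool headers=true) | inline, static |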

Loads an MDPI from a set of 3 csv files, for transitions, observations, and the initial distribution.

The MDP size is defined by the transitions file.

- Parameters
  - input_mdp: Input stream with transition probabilities and rewards
  - input_state2obs: Input stream with the mapping of states to observations
  - input_initial: Input stream with the initial distribution

◆ obspol2statepol() [1/2]

Converts a policy defined in terms of observations to a policy defined in terms of states.

- Parameters
  - obspol: Policy that maps each observation to the action to take

- Returns
  - State policy

◆ obspol2statepol() [2/2]

Converts a policy defined in terms of observations to a policy defined in terms of states.

- Parameters
  - obspol: Policy that maps each observation to the action to take
  - statepol: Target vector that receives the state policy
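The conversion performed by both overloads amounts to a lookup through the state-to-observation map: each state takes the action that the observation policy assigns to its observation. The sketch below is a standalone illustration, not the library's code; `obspol_to_statepol` is a hypothetical free function, and `indvec` is assumed to be a vector of `long` indices.

```cpp
#include <cstddef>
#include <stdexcept>
#include <vector>

// Stand-in for craam's indvec (assumed here to be a vector of long indices).
using indvec = std::vector<long>;

// Hypothetical helper: expands an observation policy into a state policy.
// statepol[s] is the action that obspol assigns to the observation of s.
indvec obspol_to_statepol(const indvec& state2observ, const indvec& obspol) {
    indvec statepol(state2observ.size());
    for (std::size_t s = 0; s < state2observ.size(); ++s) {
        const long obs = state2observ[s];
        // Reject observation indices that the policy does not cover.
        if (obs < 0 || static_cast<std::size_t>(obs) >= obspol.size()) {
            throw std::out_of_range("observation index outside the policy");
        }
        statepol[s] = obspol[static_cast<std::size_t>(obs)];
    }
    return statepol;
}
```

For example, with state2observ = {0, 1, 0, 2} and obspol = {5, 7, 9}, the resulting state policy is {5, 7, 5, 9}.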

◆ to_csv()

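| void to_csv (ostream &output_mdp, ostream &output_state2obs, ostream &output_initial, bool headers=true) const | inline |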

Saves the MDPI to a set of 3 csv files, for transitions, observations, and the initial distribution.

- Parameters
  - output_mdp: Transition probabilities and rewards
  - output_state2obs: Mapping states to observations
  - output_initial: Initial distribution

◆ to_csv_file()

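| void to_csv_file (const string &output_mdp, const string &output_state2obs, const string &output_initial, bool headers=true) const | inline |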

Saves the MDPI to a set of 3 csv files, for transitions, observations, and the initial distribution.

- Parameters
  - output_mdp: File name for transition probabilities and rewards
  - output_state2obs: File name for mapping states to observations
  - output_initial: File name for initial distribution

◆ total_return()

Computes a return of an observation policy.

- Parameters
  - discount: Discount factor

- Returns
  - Discounted return of the policy
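To make "discounted return" concrete, the sketch below evaluates a fixed policy on the Markov chain it induces and weights the resulting values by the initial distribution. It is an illustration under stated assumptions, not the solver used by the library: the dense matrix representation, the function name `discounted_return`, and the default precision of 1e-9 (a placeholder for SOLPREC, whose value is not given here) are all hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;

// Hypothetical helper: discounted return of a fixed policy.
// P[s][t] is the probability of moving from state s to state t under the
// policy, r[s] is the expected immediate reward in s, and initial[s] is the
// probability of starting in s.
double discounted_return(const Matrix& P, const std::vector<double>& r,
                         const std::vector<double>& initial,
                         double discount, double precision = 1e-9) {
    const std::size_t n = r.size();
    assert(P.size() == n && initial.size() == n);
    assert(discount >= 0.0 && discount < 1.0);

    // Iterate v <- r + discount * P v until the largest change is small.
    std::vector<double> v(n, 0.0), next(n, 0.0);
    double change = precision + 1.0;
    while (change >= precision) {
        change = 0.0;
        for (std::size_t s = 0; s < n; ++s) {
            double expected = 0.0;
            for (std::size_t t = 0; t < n; ++t) expected += P[s][t] * v[t];
            next[s] = r[s] + discount * expected;
            change = std::max(change, std::fabs(next[s] - v[s]));
        }
        v.swap(next);
    }

    // The return is the expected value under the initial distribution.
    double total = 0.0;
    for (std::size_t s = 0; s < n; ++s) total += initial[s] * v[s];
    return total;
}
```

A single state that loops to itself with reward 1 and discount 0.5 gives a return close to 1 / (1 - 0.5) = 2, which is a quick sanity check for the iteration.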

◆ transition2obs()

| Transition transition2obs (const Transition &tran) | inline |

Converts a transition from states to observations, adding probabilities of individual states.

Rewards are a convex combination of the original values.

The documentation for this class was generated from the following file:

- craam/ImMDP.hpp

1.8.13
