The class abstracts some operations of value / policy iteration in order to generalize to various types of robust MDPs.

#include <robust_values.hpp>

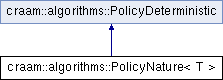

Inheritance diagram for craam::algorithms::PolicyNature< T >:

Public Types | |

| using | solution_type = SolutionRobust |

Public Types inherited from craam::algorithms::PolicyDeterministic | |

| using | solution_type = Solution |

Public Member Functions | |

| PolicyNature (indvec policy, vector< NatureInstance< T >> natspec) | |

| Constructs the object from a policy and a specification of nature. | |

| PolicyNature (vector< NatureInstance< T >> natspec) | |

| Constructs the object from a specification of nature only (no policy argument). | |

| SolutionRobust | new_solution (size_t statecount, numvec valuefunction) const |

| Constructs a new robust solution. | |

| template<class SType > | |

| prec_t | update_solution (SolutionRobust &solution, const SType &state, long stateid, const numvec &valuefunction, prec_t discount) const |

| Computes the Bellman update and updates the solution to the best response. It does not update the value function. | |

| template<class SType > | |

| prec_t | update_value (const SolutionRobust &solution, const SType &state, long stateid, const numvec &valuefunction, prec_t discount) const |

| Computes a fixed Bellman update using the current solution policy. | |

Public Member Functions inherited from craam::algorithms::PolicyDeterministic | |

| PolicyDeterministic () | |

| All actions will be optimized. | |

| PolicyDeterministic (indvec policy) | |

| A partial policy that can be used to fix some actions. policy[s] = -1 means that the action in state s should be optimized; a policy of length 0 means that all actions will be optimized. | |

| Solution | new_solution (size_t statecount, numvec valuefunction) const |

| template<class SType > | |

| prec_t | update_solution (Solution &solution, const SType &state, long stateid, const numvec &valuefunction, prec_t discount) const |

| Computes the Bellman update and updates the solution to the best response. It does not update the value function. | |

| template<class SType > | |

| prec_t | update_value (const Solution &solution, const SType &state, long stateid, const numvec &valuefunction, prec_t discount) const |

| Computes a fixed Bellman update using the current solution policy. | |

Public Attributes | |

| vector< NatureInstance< T > > | natspec |

| Specification of nature's response (the function that nature computes; it can be different for each state) | |

Public Attributes inherited from craam::algorithms::PolicyDeterministic | |

| indvec | policy |

| Partial policy specification (action -1 is ignored and optimized) | |

Additional Inherited Members | |

Protected Member Functions inherited from craam::algorithms::PolicyDeterministic | |

| void | process_valuefunction (size_t statecount, numvec &valuefunction) const |

| indvec | process_policy (size_t statecount) const |

Detailed Description

template<class T>

class craam::algorithms::PolicyNature< T >

The class abstracts some operations of value / policy iteration in order to generalize to various types of robust MDPs.

It can be used in place of response in mpi_jac or vi_gs to solve robust MDP objectives.
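To make the idea of a nature response concrete, the following is a minimal, self-contained sketch and not the craam implementation. It assumes that `prec_t` is `double` and `numvec` is a `std::vector` of it, and it uses a hypothetical function `worst_case_response` in which nature chooses, from a finite set of candidate transition distributions, the one with the lowest expected next-state value. The uncertainty sets that `NatureInstance< T >` actually supports may be quite different.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <utility>
#include <vector>

using prec_t = double;               // assumption: mirrors craam's precision type
using numvec = std::vector<prec_t>;  // assumption: mirrors craam's numeric vector

// Hypothetical nature response (illustration only, not part of craam):
// among a finite set of candidate transition distributions, pick the one
// that minimizes the expected value of the next state.
// Returns the index of the chosen distribution and its expected value.
std::pair<std::size_t, prec_t> worst_case_response(
        const std::vector<numvec>& candidates, const numvec& nextvalues) {
    assert(!candidates.empty());
    std::size_t worst = 0;
    prec_t worst_value = std::numeric_limits<prec_t>::infinity();
    for (std::size_t k = 0; k < candidates.size(); ++k) {
        assert(candidates[k].size() == nextvalues.size());
        prec_t expected = 0.0;
        for (std::size_t i = 0; i < nextvalues.size(); ++i)
            expected += candidates[k][i] * nextvalues[i];
        if (expected < worst_value) {
            worst_value = expected;
            worst = k;
        }
    }
    return {worst, worst_value};
}
```

A robust Bellman backup then uses the returned worst-case expectation where a plain MDP would use the nominal expectation.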

Member Function Documentation

◆ update_solution()

template<class T>

template<class SType >

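| prec_t craam::algorithms::PolicyNature< T >::update_solution (SolutionRobust &solution, const SType &state, long stateid, const numvec &valuefunction, prec_t discount) const | inline |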

Computes the Bellman update and updates the solution to the best response. It does not update the value function.

- Returns

- New value for the state
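The contract described here (pick the best response, record it in the solution, return the new value, leave the value function untouched) can be sketched as follows. This is an illustration under stated assumptions, not the library code: `ToyAction`, `ToyState`, `ToySolution`, `robust_action_value` and `update_solution_sketch` are hypothetical names, nature is modeled as a minimum over a finite set of candidate distributions, and the partial policy is passed in explicitly with -1 (or an empty vector) meaning "optimize".

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

using prec_t = double;               // assumption: mirrors craam's precision type
using numvec = std::vector<prec_t>;
using indvec = std::vector<long>;    // assumption: mirrors craam's index vector

// Hypothetical, simplified stand-ins for states and solutions (not craam types).
struct ToyAction {
    prec_t reward = 0.0;
    std::vector<numvec> candidates;  // distributions nature may choose from
};
struct ToyState { std::vector<ToyAction> actions; };
struct ToySolution {
    indvec policy;     // chosen action per state
    indvec natpolicy;  // index of nature's chosen distribution per state
};

// Value of one action when nature picks the candidate that minimizes it.
prec_t robust_action_value(const ToyAction& action, const numvec& valuefunction,
                           prec_t discount, long& natchoice) {
    assert(!action.candidates.empty());
    prec_t worst = std::numeric_limits<prec_t>::infinity();
    for (std::size_t k = 0; k < action.candidates.size(); ++k) {
        const numvec& p = action.candidates[k];
        assert(p.size() == valuefunction.size());
        prec_t expected = 0.0;
        for (std::size_t i = 0; i < p.size(); ++i)
            expected += p[i] * valuefunction[i];
        if (expected < worst) {
            worst = expected;
            natchoice = static_cast<long>(k);
        }
    }
    return action.reward + discount * worst;
}

// Writes the best action and nature's choice into the solution and returns
// the new state value. The value function is only read, never modified.
prec_t update_solution_sketch(ToySolution& solution, const indvec& fixedpolicy,
                              const ToyState& state, long stateid,
                              const numvec& valuefunction, prec_t discount) {
    assert(!state.actions.empty());
    const std::size_t s = static_cast<std::size_t>(stateid);
    const bool optimize = fixedpolicy.empty() || fixedpolicy[s] < 0;
    const std::size_t first =
        optimize ? 0 : static_cast<std::size_t>(fixedpolicy[s]);
    const std::size_t last = optimize ? state.actions.size() : first + 1;

    prec_t best = -std::numeric_limits<prec_t>::infinity();
    for (std::size_t a = first; a < last; ++a) {
        long natchoice = 0;
        const prec_t value = robust_action_value(state.actions[a], valuefunction,
                                                 discount, natchoice);
        if (value > best) {
            best = value;
            solution.policy[s] = static_cast<long>(a);
            solution.natpolicy[s] = natchoice;
        }
    }
    return best;  // the caller decides whether to store this in the value function
}
```

Returning the value instead of writing it lets the calling solver choose the update order, for example in-place (Gauss-Seidel style) or into a separate vector (Jacobi style).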

◆ update_value()

template<class T>

template<class SType >

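| prec_t craam::algorithms::PolicyNature< T >::update_value (const SolutionRobust &solution, const SType &state, long stateid, const numvec &valuefunction, prec_t discount) const | inline |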

Computes a fixed Bellman update using the current solution policy.

- Returns

- New value for the state
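A fixed update performs no optimization: it evaluates the decisions already stored in the solution. The sketch below illustrates this with hypothetical types (`ToyAction`, `ToyState`, `ToySolution`) and a hypothetical function `update_value_sketch`; none of these are craam names. It assumes that both the action and nature's chosen distribution are held fixed, which is an assumption of this example rather than something this page states.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using prec_t = double;               // assumption: mirrors craam's precision type
using numvec = std::vector<prec_t>;
using indvec = std::vector<long>;    // assumption: mirrors craam's index vector

// Hypothetical, simplified stand-ins for states and solutions (not craam types).
struct ToyAction {
    prec_t reward = 0.0;
    std::vector<numvec> candidates;  // distributions nature may choose from
};
struct ToyState { std::vector<ToyAction> actions; };
struct ToySolution {
    indvec policy;     // fixed action per state
    indvec natpolicy;  // fixed index of nature's distribution per state
};

// Evaluates the state under the action and the distribution stored in the
// solution. Nothing is optimized, and the solution is not modified.
prec_t update_value_sketch(const ToySolution& solution, const ToyState& state,
                           long stateid, const numvec& valuefunction,
                           prec_t discount) {
    const std::size_t s = static_cast<std::size_t>(stateid);
    assert(solution.policy[s] >= 0 && solution.natpolicy[s] >= 0);
    const ToyAction& action =
        state.actions[static_cast<std::size_t>(solution.policy[s])];
    const numvec& p =
        action.candidates[static_cast<std::size_t>(solution.natpolicy[s])];
    assert(p.size() == valuefunction.size());
    prec_t expected = 0.0;
    for (std::size_t i = 0; i < p.size(); ++i)
        expected += p[i] * valuefunction[i];
    return action.reward + discount * expected;
}
```

Repeating such fixed updates between optimizing updates is the policy-evaluation step of modified policy iteration.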

The documentation for this class was generated from the following file:

- craam/algorithms/robust_values.hpp

1.8.13