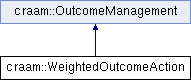

An action in a robust MDP that allows for outcomes chosen by nature.

#include <Action.hpp>

Public Member Functions

| Return type | Member | Description |
|---|---|---|
| | WeightedOutcomeAction () | Creates an empty action. |
| | WeightedOutcomeAction (const vector< Transition > &outcomes) | Initializes outcomes to the provided vector. |
| Transition & | create_outcome (long outcomeid) override | Adds a sufficient number (or 0) of empty outcomes/transitions for the provided outcomeid to be a valid identifier. |
| Transition & | create_outcome (long outcomeid, prec_t weight) | Adds a sufficient number of empty outcomes/transitions for the provided outcomeid to be a valid identifier. |
| void | set_distribution (const numvec &distribution) | Sets the base distribution over the outcomes. |
| void | set_distribution (long outcomeid, prec_t weight) | Sets the weight of a particular outcome. |
| const numvec & | get_distribution () const | Returns the baseline distribution over outcomes. |
| void | normalize_distribution () | Normalizes outcome weights to sum to one. |
| bool | is_distribution_normalized () const | Checks whether the outcome distribution is normalized. |
| void | uniform_distribution () | Sets an initial uniform value for the distribution. |
| void | to_string (string &result) const | Appends a string representation to the argument. |
| prec_t | mean_reward (const numvec &outcomedist) const | Returns the mean reward from the transition for the provided nature action. |
| prec_t | mean_reward () const | Returns the mean reward from the transition using the nominal distribution on outcomes. |
| Transition | mean_transition (const numvec &outcomedist) const | Returns the mean transition probabilities for the provided nature action. |
| Transition | mean_transition () const | Returns the mean transition probabilities using the nominal distribution on outcomes. |
| string | to_json (long actionid=-1) const | Returns a JSON representation of the action. |

Public Member Functions inherited from craam::OutcomeManagement

| Return type | Member | Description |
|---|---|---|
| | OutcomeManagement () | Creates an empty list of outcomes. |
| | OutcomeManagement (const vector< Transition > &outcomes) | Initializes with a list of outcomes. |
| virtual | ~OutcomeManagement () | Empty virtual destructor. |
| virtual Transition & | create_outcome () | Creates a new outcome at the end. |
| const Transition & | get_outcome (long outcomeid) const | Returns a transition for the outcome. |
| Transition & | get_outcome (long outcomeid) | Returns a transition for the outcome. |
| const Transition & | operator[] (long outcomeid) const | Returns a transition for the outcome. |
| Transition & | operator[] (long outcomeid) | Returns a transition for the outcome. |
| size_t | outcome_count () const | Returns the number of outcomes. |
| size_t | size () const | Returns the number of outcomes. |
| void | add_outcome (long outcomeid, const Transition &t) | Adds an outcome defined by the transition. |
| void | add_outcome (const Transition &t) | Adds an outcome defined by the transition as the last outcome. |
| const vector< Transition > & | get_outcomes () const | Returns the list of outcomes. |
| void | normalize () | Normalizes transitions for outcomes. |
| bool | is_nature_correct (numvec oid) const | Checks whether the provided outcomeid is correct. |
| void | to_string (string &result) const | Appends a string representation to the argument. |

Protected Attributes

| Type | Member | Description |
|---|---|---|
| numvec | distribution | Weights used in computing the worst/best case. |

Protected Attributes inherited from craam::OutcomeManagement

| Type | Member | Description |
|---|---|---|
| vector< Transition > | outcomes | List of possible outcomes. |

Detailed Description

An action in a robust MDP that allows for outcomes chosen by nature.

This type of action represents a zero-sum game with the decision maker choosing an action and nature choosing a distribution over outcomes. This action can be used by both regular and robust MDP algorithms.

The distribution \( d \) over outcomes is uniform by default; see WeightedOutcomeAction::create_outcome.
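To illustrate the game described above, the following C++ sketch compares the value of an action under a given distribution over outcomes with nature's worst case. It is a minimal sketch, not craam code: `expected_value`, `worst_case_value`, and the per-outcome values are hypothetical, `numvec` is assumed to be `std::vector<double>`, and nature is assumed to be free to pick any distribution, whereas a robust algorithm would typically restrict its choice.

```cpp
#include <algorithm>
#include <cassert>
#include <numeric>
#include <vector>

// Assumption: stand-in for craam's numvec.
using numvec = std::vector<double>;

// Value of the action when nature follows distribution d over outcomes;
// values[i] is the (hypothetical) expected return of outcome i.
double expected_value(const numvec& values, const numvec& d) {
    assert(values.size() == d.size());
    return std::inner_product(values.begin(), values.end(), d.begin(), 0.0);
}

// If nature may choose ANY distribution over outcomes, minimizing a linear
// function over the probability simplex puts all mass on the worst outcome.
double worst_case_value(const numvec& values) {
    assert(!values.empty());
    return *std::min_element(values.begin(), values.end());
}
```

Because the value is linear in the distribution, nature's unconstrained minimum is attained at a single outcome; restricting the set of distributions nature may choose from can only raise that minimum.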

Constructor & Destructor Documentation

◆ WeightedOutcomeAction()

[inline]

Creates an empty action.

Member Function Documentation

◆ create_outcome() [1/2]

[inline], [override], [virtual]

Adds a sufficient number (or 0) of empty outcomes/transitions for the provided outcomeid to be a valid identifier.

This override also resizes the nominal outcome distribution and reweights it accordingly.

If the corresponding outcome already exists, it is simply returned.

The baseline distribution value of each new outcome is set to

\[ d_n' = \frac{1}{n+1}, \]

where \( n \) is the new outcomeid. If the weights of the existing outcomes have a non-zero sum, they are rescaled so that they sum to the total that a uniform distribution over \( n+1 \) outcomes would assign to them:

\[ d_i' = d_i \, \frac{(m+1) \frac{1}{n+1}}{\sum_{j=0}^{m} d_j}, \quad i = 0, \ldots, m, \]

where \( m \) is the previously maximal outcomeid, and \( d_i' \) and \( d_i \) are the new and old weights of outcome \( i \), respectively. Outcomes \( i < n \) that do not exist yet are also created with the uniform weight \( \frac{1}{n+1} \). The new weights therefore sum to \( \frac{m+1}{n+1} + \frac{n-m}{n+1} = 1 \), and when outcomes are created only through this method, the resulting distribution is uniform.

An exception during the computation may leave the distribution in an incorrect state.

- Parameters
  - outcomeid: Index of the outcome to create.
- Returns
  - The transition that corresponds to outcomeid.

Reimplemented from craam::OutcomeManagement.
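The reweighting rule can be checked with a small sketch. The free function `grow_uniform` below is hypothetical; it mirrors only what the text above says about the distribution (transitions are omitted) and assumes `numvec` is `std::vector<double>`.

```cpp
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// Assumption: stand-in for craam's numvec.
using numvec = std::vector<double>;

// Hypothetical analogue of the distribution update in create_outcome(outcomeid).
void grow_uniform(numvec& d, long outcomeid) {
    assert(outcomeid >= 0);
    const std::size_t newsize = static_cast<std::size_t>(outcomeid) + 1;
    const std::size_t oldsize = d.size();
    if (newsize <= oldsize) return;  // outcome already exists: nothing to do

    const double newweight = 1.0 / static_cast<double>(newsize);  // 1/(n+1)
    const double sum = std::accumulate(d.begin(), d.end(), 0.0);
    if (oldsize > 0 && sum > 0.0) {
        // Rescale existing weights so they sum to oldsize/(n+1),
        // the share a uniform distribution would give them.
        const double scale = static_cast<double>(oldsize) * newweight / sum;
        for (double& w : d) w *= scale;
    }
    // Every newly created outcome receives the uniform weight 1/(n+1).
    d.resize(newsize, newweight);
}
```

For example, growing the weights {0.2, 0.8} to outcomeid 3 rescales them to {0.1, 0.4} and appends two weights of 0.25, so the result again sums to one.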

◆ create_outcome() [2/2]

[inline]

Adds a sufficient number of empty outcomes/transitions for the provided outcomeid to be a valid identifier.

The weights of any new outcomes with an index below outcomeid are set to 0. This operation does not rescale the other weights to preserve their sum.

If the outcome already exists, its nominal weight is overwritten.

Note that this operation may leave the action in an invalid state in which the nominal outcome distribution does not sum to 1.

- Parameters
  - outcomeid: Index of the outcome to create.
  - weight: New nominal weight for the outcome.
- Returns
  - The transition that corresponds to outcomeid.

◆ get_distribution()

[inline]

Returns the baseline distribution over outcomes.

◆ mean_reward() [1/2]

Returns the mean reward from the transition for the provided nature action.
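One plausible reading of mean_reward(outcomedist) is the outcomedist-weighted average of each outcome's expected reward. The sketch below assumes exactly that; `SimpleTransition` is a hypothetical stand-in for Transition that stores a probability and a reward per target, and `numvec` is assumed to be `std::vector<double>`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Assumption: stand-in for craam's numvec.
using numvec = std::vector<double>;

// Hypothetical simplified transition: probabilities[j] of moving to the
// j-th listed target and the reward received on that move.
struct SimpleTransition {
    numvec probabilities;
    numvec rewards;

    // Expected reward of this single outcome.
    double mean_reward() const {
        assert(probabilities.size() == rewards.size());
        double r = 0.0;
        for (std::size_t j = 0; j < rewards.size(); ++j)
            r += probabilities[j] * rewards[j];
        return r;
    }
};

// Mean reward of an action whose outcomes are weighted by outcomedist.
double mean_reward(const std::vector<SimpleTransition>& outcomes,
                   const numvec& outcomedist) {
    assert(outcomes.size() == outcomedist.size());
    double r = 0.0;
    for (std::size_t i = 0; i < outcomes.size(); ++i)
        r += outcomedist[i] * outcomes[i].mean_reward();
    return r;
}
```

Under the same assumption, passing the nominal weights from get_distribution() would correspond to the no-argument overload.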

◆ mean_reward() [2/2]

[inline]

Returns the mean reward from the transition using the nominal distribution on outcomes.

◆ mean_transition() [1/2]

[inline]

Returns the mean transition probabilities for the provided nature action.
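Under an analogous assumption, mean_transition(outcomedist) mixes the outcomes' transition probabilities with the weights in outcomedist. This hypothetical sketch represents each outcome as a dense probability vector over target states, which may differ from how Transition actually stores them; `numvec` is assumed to be `std::vector<double>`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Assumption: stand-in for craam's numvec.
using numvec = std::vector<double>;

// Mixture of dense outcome distributions: mean[s] = sum_i outcomedist[i] * outcomes[i][s].
numvec mean_transition(const std::vector<numvec>& outcomes,
                       const numvec& outcomedist) {
    assert(outcomes.size() == outcomedist.size());
    numvec mean;
    for (std::size_t i = 0; i < outcomes.size(); ++i) {
        // Outcomes may reach different numbers of states; pad with zeros.
        if (outcomes[i].size() > mean.size())
            mean.resize(outcomes[i].size(), 0.0);
        for (std::size_t s = 0; s < outcomes[i].size(); ++s)
            mean[s] += outcomedist[i] * outcomes[i][s];
    }
    return mean;
}
```

If outcomedist sums to one and each outcome's probabilities sum to one, the mixture sums to one as well.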

◆ mean_transition() [2/2]

[inline]

Returns the mean transition probabilities using the nominal distribution on outcomes.

◆ normalize_distribution()

[inline]

Normalizes outcome weights to sum to one.

Assumes that the distribution is initialized. An exception is thrown if the distribution sums to zero.
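A minimal sketch of the normalization and of the related is_distribution_normalized check, written as free functions on a weight vector. The function names mirror the members but are hypothetical, `numvec` is assumed to be `std::vector<double>`, and the tolerance used by the check is an assumption.

```cpp
#include <cassert>
#include <cmath>
#include <numeric>
#include <stdexcept>
#include <vector>

// Assumption: stand-in for craam's numvec.
using numvec = std::vector<double>;

// Divides every weight by the total; refuses to divide by a zero sum.
void normalize_distribution(numvec& d) {
    assert(!d.empty() && "distribution must be initialized");
    const double sum = std::accumulate(d.begin(), d.end(), 0.0);
    if (sum == 0.0)
        throw std::invalid_argument("cannot normalize: weights sum to zero");
    for (double& w : d) w /= sum;
}

// True when the weights sum to one within tol (tolerance is an assumption).
bool is_distribution_normalized(const numvec& d, double tol = 1e-9) {
    const double sum = std::accumulate(d.begin(), d.end(), 0.0);
    return std::abs(sum - 1.0) <= tol;
}
```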

◆ set_distribution() [1/2]

[inline]

Sets the base distribution over the outcomes.

The function checks the distribution for correctness.

- Parameters
  - distribution: New distribution of outcomes.
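The checks performed by set_distribution are not listed here. The sketch below assumes three plausible ones (size equal to the number of outcomes, non-negative weights, and a sum of one within a tolerance) and throws `std::invalid_argument` otherwise; `check_distribution` and its tolerance are hypothetical, and `numvec` is assumed to be `std::vector<double>`.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <numeric>
#include <stdexcept>
#include <vector>

// Assumption: stand-in for craam's numvec.
using numvec = std::vector<double>;

// Hypothetical validation a setter could run before storing d.
void check_distribution(const numvec& d, std::size_t outcome_count,
                        double tol = 1e-6) {
    assert(tol >= 0.0);
    if (d.size() != outcome_count)
        throw std::invalid_argument("distribution size must match the number of outcomes");
    if (std::any_of(d.begin(), d.end(), [](double w) { return w < 0.0; }))
        throw std::invalid_argument("distribution weights must be non-negative");
    const double sum = std::accumulate(d.begin(), d.end(), 0.0);
    if (std::abs(sum - 1.0) > tol)
        throw std::invalid_argument("distribution weights must sum to one");
}
```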

◆ set_distribution() [2/2]

[inline]

Sets the weight of a particular outcome.

The function does not check the distribution for correctness.

- Parameters
  - outcomeid: Index of the outcome whose weight is set.
  - weight: New weight.

◆ to_json()

[inline]

Returns a JSON representation of the action.

- Parameters
  - actionid: Action id to include in the JSON representation.

◆ uniform_distribution()

[inline]

Sets an initial uniform value for the distribution.

If the distribution already exists, then it is overwritten.
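Overwriting the weights with a uniform distribution amounts to assigning \( 1/k \) to each of \( k \) outcomes. The free function below is a hypothetical sketch; requiring at least one outcome is an assumption of this sketch, and `numvec` is assumed to be `std::vector<double>`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Assumption: stand-in for craam's numvec.
using numvec = std::vector<double>;

// Replaces any existing weights with 1/outcome_count per outcome.
void uniform_distribution(numvec& d, std::size_t outcome_count) {
    assert(outcome_count > 0);
    d.assign(outcome_count, 1.0 / static_cast<double>(outcome_count));
}
```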

The documentation for this class was generated from the following file:

- craam/Action.hpp

1.8.13
