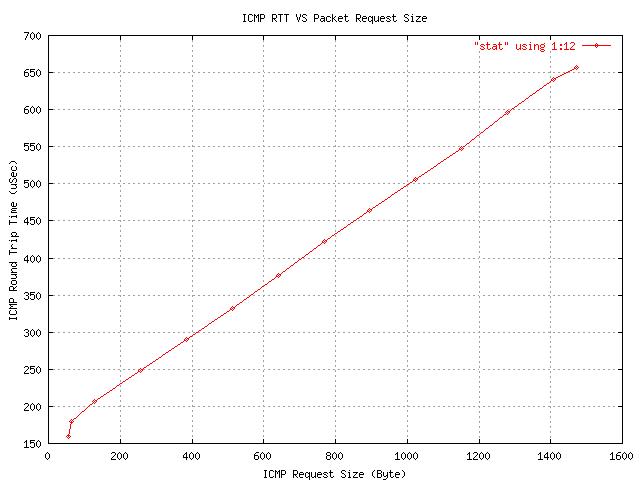

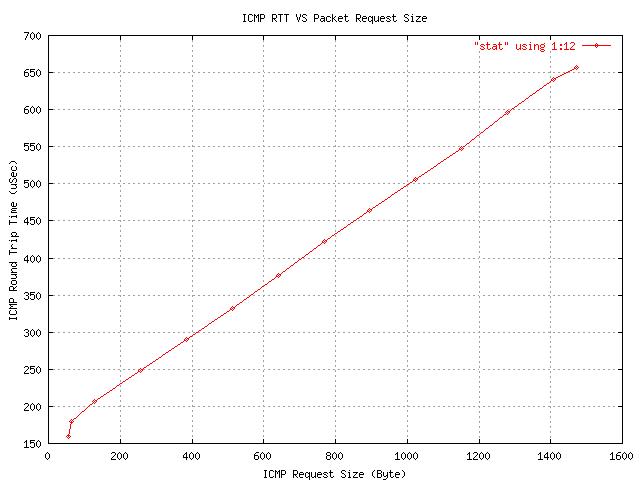

A PostScript version of this picture is here; its gnuplot script is here; the statistics are here.

Experiments on the delay show that the actual delay introduced by the line and the router should be close to 72 uSec.

Infrastructure here

madrid$ ping -U -c 12 -s requestSize 192.168.2.2

RTT in uSec for each ICMP request size (bytes):

| ICMP request size | 56 | 64 | 128 | 256 | 384 | 512 | 640 | 768 | 896 | 1024 | 1152 | 1280 | 1408 | 1472 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1st | 158 | 173 | 197 | 255 | 291 | 351 | 379 | 420 | 473 | 507 | 570 | 587 | 642 | 659 |
| 2nd | 150 | 182 | 222 | 245 | 282 | 333 | 370 | 410 | 453 | 496 | 541 | 593 | 629 | 660 |
| 3rd | 160 | 173 | 197 | 257 | 288 | 327 | 379 | 421 | 468 | 528 | 558 | 636 | 649 | 651 |
| 4th | 174 | 195 | 224 | 239 | 284 | 337 | 376 | 410 | 464 | 501 | 538 | 597 | 666 | 659 |
| 5th | 160 | 176 | 202 | 244 | 311 | 321 | 376 | 451 | 468 | 518 | 549 | 585 | 645 | 651 |
| 6th | 151 | 189 | 204 | 244 | 286 | 329 | 367 | 413 | 482 | 494 | 538 | 595 | 629 | 676 |
| 7th | 163 | 172 | 193 | 263 | 303 | 321 | 375 | 421 | 463 | 506 | 550 | 583 | 639 | 647 |
| 8th | 159 | 179 | 202 | 250 | 284 | 332 | 371 | 420 | 453 | 500 | 540 | 600 | 632 | 658 |
| 9th | 157 | 177 | 210 | 247 | 295 | 335 | 399 | 447 | 461 | 508 | 562 | 593 | 651 | 650 |
| 10th | 160 | 182 | 215 | 239 | 283 | 332 | 373 | 410 | 453 | 497 | 537 | 591 | 627 | 658 |
| Average | 159.2 | 179.8 | 206.6 | 248.3 | 290.7 | 331.8 | 376.5 | 422.3 | 463.8 | 505.5 | 548.3 | 596 | 640.9 | 656.9 |
| Variation | 4.2 | 5.76 | 8.92 | 6.36 | 7.44 | 5.84 | 5.5 | 10.68 | 7.2 | 7.9 | 9.5 | 9 | 9.7 | 5.72 |
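The Average and Variation entries of the table above can be rechecked from the ten samples of a column. The sketch below assumes that "Variation" means the mean absolute deviation from the average; the text does not say so, but that definition reproduces the tabulated values for the 56-byte and 64-byte columns. The function and variable names are illustrative only.

```python
# RTT samples in uSec, copied from the 56-byte and 64-byte columns of the table.
samples_56 = [158, 150, 160, 174, 160, 151, 163, 159, 157, 160]
samples_64 = [173, 182, 173, 195, 176, 189, 172, 179, 177, 182]


def mean(values):
    """Arithmetic mean of the samples."""
    return sum(values) / len(values)


def mean_abs_deviation(values):
    """Average absolute distance of each sample from the mean.

    Assumption: this is what the table's 'Variation' row reports.
    """
    m = mean(values)
    return sum(abs(v - m) for v in values) / len(values)


avg_56, var_56 = mean(samples_56), mean_abs_deviation(samples_56)
avg_64, var_64 = mean(samples_64), mean_abs_deviation(samples_64)

print(round(avg_56, 2), round(var_56, 2))  # 159.2 4.2
print(round(avg_64, 2), round(var_64, 2))  # 179.8 5.76
```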

Experiments Raw data:

A PostScript version of this picture is here; its gnuplot script is here; the statistics are here.

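We can approximate the measured curve by the straight line passing through the points (256, 248.3) and (1408, 640.9), i.e. the average RTT in uSec for the 256-byte and 1408-byte request sizes.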

RTT = a * Request_Size + b

so

248.3 = a*256 + b

640.9 = a*1408 + b

Solving these two equations gives a = 0.3408 and b = 161.0556.
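As a quick check of the arithmetic, the two equations can be solved numerically. This is only a sketch of the two-point calculation described above, not a least-squares fit over all columns; the variable names are chosen for illustration.

```python
# Two (request size in bytes, average RTT in uSec) points taken from the text.
x1, y1 = 256, 248.3
x2, y2 = 1408, 640.9

# Slope and intercept of the straight line RTT = a * Request_Size + b.
a = (y2 - y1) / (x2 - x1)  # uSec per byte
b = y1 - a * x1            # uSec

print(f"a = {a:.4f}, b = {b:.4f}")  # a = 0.3408, b = 161.0556
```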

Theoretically,

RTT/2 = delay + 2*(Packet_Size/Rate)

(Packet_Size = Request_size + ICMP header(8) + IP header (20) + Ethernet Wrapper(26) )

RTT = 2*delay + 4*( (Request_Size+8+20+26)/Rate )

RTT = Request_Size * ( 4/Rate ) + ( 2*delay + 216/Rate )

Where Rate = 100Mb/sec = 12.5MB/Sec = 12.5 B/uSec

4/Rate = 4/12.5 = 0.32, which is very close to the slope a = 0.3408 obtained from the experiment.

b = 2*delay + 216/Rate = 161.0556

So delay = (161.0556 - 216/12.5)/2 = 71.8878 uSec, or about 72 uSec.
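The same numbers can be reproduced with a few lines of code. The sketch takes the fitted intercept b = 161.0556 uSec and the rate of 12.5 B/uSec from the text; the constant names are illustrative.

```python
RATE = 12.5              # link rate in bytes per uSec (100 Mb/sec)
OVERHEAD = 8 + 20 + 26   # ICMP header + IP header + Ethernet wrapper, in bytes
B_FITTED = 161.0556      # intercept of the fitted line, in uSec

# Theoretical slope: each request byte is counted four times per round trip.
slope_theory = 4 / RATE

# Intercept model: b = 2*delay + 4*OVERHEAD/Rate, solved for delay.
delay = (B_FITTED - 4 * OVERHEAD / RATE) / 2

print(f"{slope_theory:.2f} {delay:.4f}")  # 0.32 71.8878
```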

Why is this result so far from the one we got in the previous experiment (145 uSec)?

Possible reasons:

1. In the previous calculation, the time used to copy data from the kernel to the device (or from the device to the kernel) is actually the sending/receiving time. The cable is only 3 meters long, so the cable delay is 3/(3*10**8) sec = 0.01 uSec, while the sending/receiving time is 1526*8/(100*10**6) sec = 122.08 uSec. So before the sending machine finishes sending the packet, the receiving machine has already started receiving the same packet, i.e. the sending and receiving times overlap.

2. When we talk about the delay of a specific infrastructure, this delay should be a constant. In the ideal case, it is the time a single bit takes to travel from the source host to the destination host, and it should not depend on the packet length. So the previous calculation, which used the time to copy data from the kernel to the device (or from the device to the kernel), is not right.
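The two figures quoted in reason 1 can be recomputed as follows. The sketch uses the values from the text (3 m of cable, a signal speed of 3*10**8 m/sec, a 1526-byte frame, a 100 Mb/sec link); the frame size equals 1472 + 54, which appears to be the largest request size plus the header overhead used earlier. The constant names are illustrative.

```python
CABLE_LENGTH_M = 3           # cable length in meters
SIGNAL_SPEED_M_PER_S = 3e8   # propagation speed used in the text
FRAME_BYTES = 1526           # 1472-byte request + 8 + 20 + 26 bytes of overhead
LINK_RATE_BPS = 100e6        # 100 Mb/sec

# Propagation delay over the cable, converted from seconds to uSec.
cable_delay_us = CABLE_LENGTH_M / SIGNAL_SPEED_M_PER_S * 1e6

# Time to put the whole frame on the wire, converted from seconds to uSec.
tx_time_us = FRAME_BYTES * 8 / LINK_RATE_BPS * 1e6

print(f"{cable_delay_us:.2f} {tx_time_us:.2f}")  # 0.01 122.08
```

The sending time is about four orders of magnitude larger than the cable delay, so the first bits reach the receiver long before the sender has finished transmitting.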